A lot of these projects start with some random board in the stash of components and the idea to run RIOT on it. In this instance I dug up my Intel Cyclone 10 FPGA board and gave porting RIOT to it a shot.

The board in question is a not-so-beefy Intel FPGA board from a Chinese vendor with:

- A lot of GPIO headers

- Two LEDs

- Two buttons

- USB to UART

- Three seven segment displays

- Gigabit Ethernet interface

- HDMI

On the memory side there is a 32 MB SDRAM chip and 8 MB of serial NOR flash. Enough to have something.

For this project I picked an existing open RISC-V core and a number of peripherals, combined them into a working proof of concept on the FPGA, and got RIOT running on it with decent performance.

FPGA intro

An FPGA is something completely different from the usual microcontroller. An FPGA consists of raw logic blocks that can be configured with combinatorial functions and memory elements. These logic blocks are surrounded by a mesh of programmable interconnects to create larger functions. Most FPGAs also contain some form of on-chip block RAM and hardware multiplier blocks.
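To make the idea of a configurable logic block a bit more concrete, here is a minimal Python sketch of a lookup table (LUT), the usual core of such a block. It assumes a 4-input LUT, and the helper names `make_lut` and `lut_eval` are made up for this illustration; they do not come from any FPGA tool.

```python
def make_lut(func, inputs=4):
    """Build the truth-table bits a LUT would be configured with."""
    table = 0
    for index in range(1 << inputs):
        # Bit i of the index is the value of input i.
        bits = [(index >> i) & 1 for i in range(inputs)]
        if func(*bits):
            table |= 1 << index
    return table


def lut_eval(table, *bits):
    """Look up the output for the given input bits."""
    index = sum(bit << i for i, bit in enumerate(bits))
    return (table >> index) & 1


# "Configure" the LUT as a 4-input XOR (parity) function.
xor4 = make_lut(lambda a, b, c, d: a ^ b ^ c ^ d)
print(f"configuration bits: 0x{xor4:04x}")
print("xor(1, 0, 1, 1) =", lut_eval(xor4, 1, 0, 1, 1))
```

The 16 configuration bits are the kind of data that ends up in the bitstream: changing the function only changes the table, not the hardware around it.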

‘Programming’ the FPGA is done via a hardware description language. VHDL and Verilog are the common ones and are widely supported by commercial tools. These tools translate the description into a bitstream that contains the configuration of the logic elements and interconnects.

The elements available on an FPGA can be used to create complex logic circuits in hardware. This includes full processing cores and memory buses.

Tooling around the FPGA is usually proprietary and provided by the vendors, with expensive bonus options for advanced analysis. Often the part that synthesises the code into the FPGA-specific bitstream is free, but closed. However, there are some community efforts to reverse engineer the bitstream content to get fully open source toolchains around FPGA development.

Another important aspect of FPGA development is simulation. Simulation is essential when developing FPGA applications and takes over the role that a debugger has in software development. Simulations are often paired with scripts to test the device under test (DUT). Other simulators such as Verilator allow live interaction with the DUT via a simulated UART.

SpinalHDL

For this project I decided to go with SpinalHDL, a Scala-based hardware description language that generates VHDL or Verilog. Because it generates plain VHDL or Verilog it can be integrated into the existing tooling without much effort.

The main disadvantage of VHDL and Verilog is that they carry around a lot of legacy. The languages need a lot of boilerplate code and do not lend themselves well to modern abstraction layers. For example, SpinalHDL contains an abstraction to implement a memory-mapped peripheral device, which can later be instantiated with a real peripheral bus such as one of the AMBA flavors, Avalon or Wishbone.

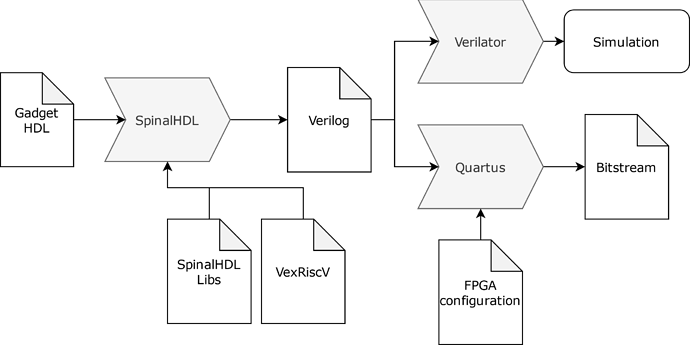

Toolchain Overview

The full toolchain consists of multiple applications. First we have our own code written in SpinalHDL. Using the SpinalHDL tooling with SBT and any external libraries this is converted into Verilog code. The Verilog code can then either be simulated via Verilator, or loaded into the Intel Quartus tool to synthesise it into a bitstream for the FPGA.

SoC

SoC Requirements

To get a fully working RIOT application running on the FPGA system, a number of components are required:

- CPU: For the obvious reason that a core to execute instructions is required. It needs to have support for the Zicsr extension for the control and status registers used by RIOT. Interrupts and the ecall instruction are also needed to support the thread switching in RIOT.

- Interrupt Controller: The interrupt controller can be a separate peripheral. Depending on the RISC-V core there are only a few interrupt lines, and all interrupts from peripherals are mapped onto a single CPU interrupt, with a separate interrupt controller to query which peripheral triggered the interrupt.

- Memory: A bit of memory is needed to store the application in and use as RAM. For the PoC the FPGA internal RAM bits can be used.

- Debug interface: A debug interface is not a luxury here: it can be used to load the test application and step through the code to inspect the workings of the core.

- Timer: With RIOT a timer is needed relatively soon, especially for benchmarking.

Implementation

The implementation of the core can be found on my GitHub. The implementation is roughly based on the examples found in the VexRiscv repository. Added peripherals are based on implementations available in the SpinalHDL library.

Most of the design runs at 50 MHz, which is provided by the oscillator on the board. The FPGA contains 4 PLL blocks which can be used to generate different clocks where needed.

CPU

The RISC-V core used is the VexRiscv, a highly configurable RISC-V implementation. The CPU is configured via a list of plugins that define which features are added to the core. Each plugin can be configured separately. Some define extra instructions, others provide different bus access implementations.

The CPU-specific bits of the code are here.

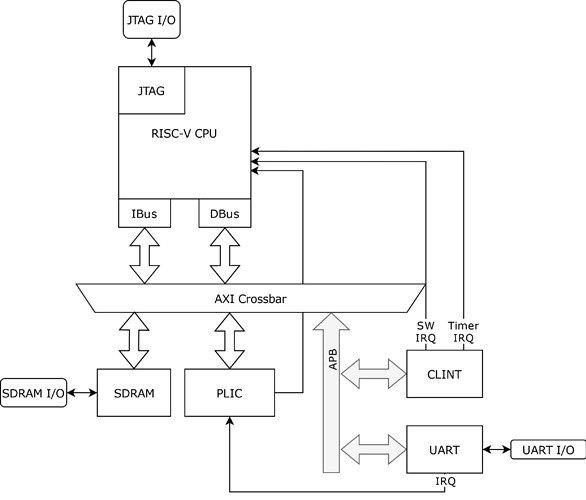

The core used supports three interrupt signals: the software interrupt, the timer interrupt and the external interrupt. With only these interrupt lines available, an external interrupt controller is needed to multiplex the different interrupt sources onto the single external interrupt line.

The configuration includes a JTAG debug peripheral which is exposed to external GPIO pins on the FPGA.

Interrupt Controller

For the interrupt controller a simple PLIC-based controller is used. This is the same peripheral used on the SiFive FE310 core and the driver already available in RIOT should work with this implementation.

In SpinalHDL we have to take care to hook the individual interrupt signals from the peripherals to the inputs of the PLIC instance and wire the interrupt output to the external interrupt input of the core.
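The multiplexing role of the PLIC can be sketched with a small behavioural model. This is a heavily simplified illustration, not the SpinalHDL or SiFive implementation: it only covers priorities, enables, a threshold and the claim step, and it leaves out the completion handshake. The class name `TinyPlic` and the source numbers are hypothetical.

```python
class TinyPlic:
    """Very reduced PLIC model: priorities, enables, pending bits, claim."""

    def __init__(self, num_sources):
        # Source 0 is reserved and means "no interrupt".
        size = num_sources + 1
        self.priority = [0] * size
        self.enabled = [False] * size
        self.pending = [False] * size
        self.threshold = 0

    def raise_irq(self, source):
        """A peripheral asserts its interrupt line."""
        self.pending[source] = True

    def _best(self):
        # Highest priority wins; on a tie the lowest source number wins.
        best = 0
        for source in range(1, len(self.pending)):
            if (self.pending[source] and self.enabled[source]
                    and self.priority[source] > self.threshold
                    and self.priority[source] > self.priority[best]):
                best = source
        return best

    def external_interrupt(self):
        """Level of the single line wired to the core's external interrupt."""
        return self._best() != 0

    def claim(self):
        """What the CPU reads to find out which peripheral interrupted."""
        source = self._best()
        self.pending[source] = False
        return source


UART_IRQ, GPIO_IRQ = 1, 2  # hypothetical source numbers
plic = TinyPlic(num_sources=2)
plic.priority[UART_IRQ], plic.enabled[UART_IRQ] = 1, True
plic.priority[GPIO_IRQ], plic.enabled[GPIO_IRQ] = 3, True
plic.raise_irq(UART_IRQ)
plic.raise_irq(GPIO_IRQ)

claimed = []
while plic.external_interrupt():
    claimed.append(plic.claim())
print("claim order:", claimed)
```

Both peripherals share one interrupt line into the core, and the claim step tells the interrupt handler which source to service first.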

Memory

The memory currently used is the external SDRAM chip available on the board. This gives access to 32 MB of RAM that is used for both the ROM and the RAM parts of the RIOT application.

The SDRAM controller itself runs in a faster and separate clock domain to speed up transactions to the memory. To make sure this interfaces correctly with the main bus a clock crossing is needed. SpinalHDL provides a simple clock crossing bus interface that can be inserted into the connection.

As this needed a bit of custom work to get it all hooked up and I couldn’t directly use the implementation available in SpinalHDL, its implementation is here. The main change compared to the provided SDRAM controller is that I inserted the clock domain crossing in between so that the controller can run at a higher speed.

The main disadvantage of the external RAM is that it is relatively slow compared to the internal RAM bits of the FPGA. An instruction and data cache becomes a must to keep the CPU core fed. On the other hand it provides 32 MB of RAM, which is more than what we usually have available on a microcontroller.
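Why the cache matters so much can be shown with the usual average access time estimate. The sketch below is only a back-of-the-envelope model; the cycle counts and hit rates are hypothetical and were not measured on this design.

```python
def average_access_cycles(hit_rate, hit_cycles, miss_penalty_cycles):
    """Average cycles per memory access for a single cache level."""
    return hit_cycles + (1.0 - hit_rate) * miss_penalty_cycles


# Hypothetical numbers: 1 cycle on a hit, 20 extra cycles to go out to SDRAM.
for hit_rate in (0.0, 0.90, 0.99):
    cycles = average_access_cycles(hit_rate, hit_cycles=1,
                                   miss_penalty_cycles=20)
    print(f"hit rate {hit_rate:4.0%}: {cycles:.2f} cycles per access")
```

With these made-up numbers a core without a cache (hit rate 0) pays the full SDRAM latency on every access, while even a modest hit rate brings the average close to the single-cycle case.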

Timer

A RISC-V core local interruptor (CLINT) is added to act as timer peripheral. This is also a peripheral that is somewhat standardized and available on the SiFive FE310 core. There is already a driver available in RIOT.

The software interrupt of the core is hooked up to the software interrupt output of this peripheral. The same is done with the timer interrupt provided by the peripheral.

The timer peripheral is running at the same 50 MHz clock used throughout the design. For a RIOT timer this is on the fast side and in the future a clock divider could be added to decrease it to 1 MHz.
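The effect of such a divider is simple arithmetic. The sketch below works out the divider ratio and, as an extra illustration, how quickly a timer value wraps if software only looks at 32 bits of it; that 32-bit view is an assumption made for the example, not something taken from the design.

```python
CPU_CLOCK_HZ = 50_000_000    # clock used throughout the design
TARGET_TIMER_HZ = 1_000_000  # the 1 MHz mentioned as a future option


def divider_ratio(input_hz, output_hz):
    """Integer ratio for a clock divider, rejecting non-integer ratios."""
    if input_hz % output_hz != 0:
        raise ValueError("output frequency must divide the input frequency")
    return input_hz // output_hz


def wrap_seconds(timer_hz, counter_bits=32):
    """Seconds until a counter of the given width overflows."""
    return (1 << counter_bits) / timer_hz


ratio = divider_ratio(CPU_CLOCK_HZ, TARGET_TIMER_HZ)
print(f"divide by {ratio}")
print(f"32-bit wrap at 50 MHz: {wrap_seconds(CPU_CLOCK_HZ):.1f} s")
print(f"32-bit wrap at 1 MHz:  {wrap_seconds(TARGET_TIMER_HZ):.1f} s")
```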

UART

The UART peripheral used is a simple implementation available from SpinalHDL. The interesting bit here is that the flexibility of the FPGA can be leveraged to configure it with fixed default settings, in this case a baud rate of 115200 and the number of start and stop bits. This way the setup and configuration part can be omitted from the RIOT code at the cost of some runtime flexibility.
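Fixing the baud rate in hardware boils down to picking an integer clock divider at generation time. The sketch below shows the arithmetic for the 50 MHz clock and 115200 baud; the value of 8 samples per bit is an assumption for the example, and the real peripheral may define its divider register slightly differently (for instance off by one).

```python
def uart_divider(clock_hz, baud, samples_per_bit):
    """Integer clock divider and the resulting baud rate error."""
    divider = round(clock_hz / (baud * samples_per_bit))
    actual_baud = clock_hz / (divider * samples_per_bit)
    error = (actual_baud - baud) / baud
    return divider, actual_baud, error


divider, actual_baud, error = uart_divider(50_000_000, 115200,
                                           samples_per_bit=8)
print(f"divider={divider}, actual baud={actual_baud:.0f}, error={error:+.2%}")
```

Under these assumptions the rounding error stays well below one percent, which is comfortably within what a UART link usually tolerates.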

Bus overview

For the bus interface a system based on Arm AMBA is used. The main interface to the CPU uses an AXI4 crossbar, and an APB3 bridge is added for slower peripherals such as the UART and the timer.

Creation of the AXI crossbar interface is here and the APB3 interface decoder is here. With these interfaces the memory map of the system is defined and the addresses specified here are needed in the RIOT port.
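What the crossbar and the APB3 decoder do with this memory map can be sketched as a simple address lookup. The layout below is hypothetical: only the 32 MiB SDRAM size comes from the board, while every base address and peripheral window size is made up for the example and does not reflect the actual design.

```python
from collections import namedtuple

Region = namedtuple("Region", "name base size")

# Hypothetical layout: only the 32 MiB SDRAM size comes from the board,
# every base address and the peripheral window sizes are made up.
MEMORY_MAP = [
    Region("sdram", 0x0000_0000, 32 * 1024 * 1024),
    Region("uart",  0xF000_0000, 0x1000),
    Region("clint", 0xF001_0000, 0x1_0000),
    Region("plic",  0xF100_0000, 0x40_0000),
]


def check_no_overlap(regions):
    """Raise if two regions of the map claim the same addresses."""
    ordered = sorted(regions, key=lambda r: r.base)
    for low, high in zip(ordered, ordered[1:]):
        if low.base + low.size > high.base:
            raise ValueError(f"{low.name} overlaps {high.name}")


def decode(address, regions=MEMORY_MAP):
    """Return (region name, offset) the way a bus decoder selects a slave."""
    for region in regions:
        if region.base <= address < region.base + region.size:
            return region.name, address - region.base
    raise ValueError(f"no peripheral at 0x{address:08x}")


check_no_overlap(MEMORY_MAP)
print(decode(0x0000_0080))
print(decode(0xF000_0004))
```

The same table, with the real addresses, is what the RIOT port has to mirror in its peripheral base address definitions and linker configuration.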

Synthesis

Two versions of the system can be generated. One suitable for synthesis on an FPGA and one suitable for simulation. Running

sbt run

in the root directory of the repo gives the two options.

RIOT port

On the RIOT side things are actually quite trivial, as visible in the commit. Part of this is because a lot of the hardware is configured with proper defaults from the SpinalHDL code. This is especially visible with the UART peripheral, where almost no configuration is needed to initialize the peripheral. There is also no clock initialization required.

In the future peripherals could get a clock enable to save power when they are not in use, but this needs modification to the clock domains initialized in the SpinalHDL code.

One thing to keep in mind is that the RISC-V architecture specified in the RIOT makefiles must match what is provided by the core. In this case an rv32imc core is used, so atomics are not supported and have been removed from the port.
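The kind of check involved can be illustrated with a small parser for ISA strings. This is only a sketch that understands simple strings such as rv32imc (optionally followed by underscore-separated extensions); it is not how the RIOT build system handles the architecture, and the function name `parse_isa` is made up.

```python
import re


def parse_isa(isa):
    """Split a RISC-V ISA string such as 'rv32imc' into XLEN and extensions."""
    match = re.fullmatch(r"rv(32|64)([a-z]+)((?:_[a-z0-9]+)*)", isa.lower())
    if match is None:
        raise ValueError(f"unrecognised ISA string: {isa!r}")
    xlen = int(match.group(1))
    # Single-letter extensions directly after the base width.
    extensions = set(match.group(2))
    # Multi-letter extensions separated by underscores.
    extensions.update(name for name in match.group(3).split("_") if name)
    return xlen, extensions


xlen, extensions = parse_isa("rv32imc")
print(xlen, sorted(extensions))
print("atomics available:", "a" in extensions)
```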

Running the system

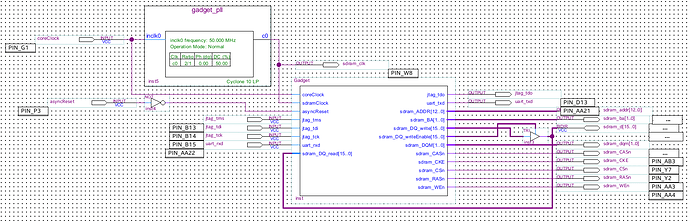

Synthesis of the generated verilog code is done using the Intel Quartus tooling. The verilog code can be imported into a Quartus project. To add the necessary infrastructure around the core, I used a block diagram file:

Because sometimes it is just easier to quickly click things together. The added components are a PLL to generate the 100 MHz clock for the SDRAM and the tri-state buffers used in the SDRAM interface. The rest is just pins exposed to the FPGA.

Synthesis of the whole system shows a usage of 6097 LUTs and 3412 registers. Not exactly a minimal build, but not surprising given that the interfaces and configuration used are not exactly minimal either.

Flashing

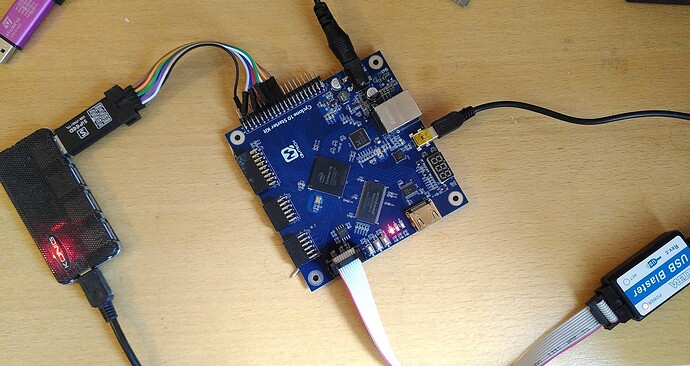

The bitstream generated on the system is deployed on an Intel Cyclone 10 10CL016YU484C8G FPGA:

The Altera USB blaster is used as JTAG interface into the FPGA itself and the SiPeed jtag is hooked up to the GPIO of the RISC-V core.

With a bit of OpenOCD and GDB we get a working debug connection to the core and load the mutex_pingpong test benchmark:

koen@zometeen ~/dev/RIOT-vexriscv $ gdb tests/bench_mutex_pingpong/bin/qmtech_cyclone10/tests_bench_mutex_pingpong.elf

GNU gdb (Gentoo 11.2 vanilla) 11.2

Copyright (C) 2022 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Type "show copying" and "show warranty" for details.

This GDB was configured as "x86_64-pc-linux-gnu".

Type "show configuration" for configuration details.

For bug reporting instructions, please see:

<https://bugs.gentoo.org/>.

Find the GDB manual and other documentation resources online at:

<http://www.gnu.org/software/gdb/documentation/>.

For help, type "help".

Type "apropos word" to search for commands related to "word"...

Reading symbols from tests/bench_mutex_pingpong/bin/qmtech_cyclone10/tests_bench_mutex_pingpong.elf...

(gdb) target extended-remote :3333

Remote debugging using :3333

idle_thread (arg=<optimized out>) at /data/riotbuild/riotbase/core/lib/init.c:75

75 /data/riotbuild/riotbase/core/lib/init.c: No such file or directory.

(gdb) load

Loading section .init, size 0x70 lma 0x0

Loading section .text, size 0x2ca4 lma 0x80

Loading section .rodata, size 0x2c0 lma 0x2d24

Loading section .data, size 0xa0 lma 0x2fe4

Start address 0x00000000, load size 12404

Transfer rate: 59 KB/sec, 3101 bytes/write.

(gdb) monitor reset halt

JTAG tap: vexrisc_ocd.bridge tap/device found: 0x10001fff (mfg: 0x7ff (<invalid>), part: 0x0001, ver: 0x1)

(gdb) cont

Continuing.

And with a separate serial console we can watch the output of the benchmark:

koen@zometeen ~/dev/RIOT-vexriscv $ ~/.local/bin/pyserial-miniterm /dev/ttyUSB0 115200 --eol LF

--- Miniterm on /dev/ttyUSB0 115200,8,N,1 ---

--- Quit: Ctrl+] | Menu: Ctrl+T | Help: Ctrl+T followed by Ctrl+H ---

main(): This is RIOT! (Version: 2022.07-devel-86-gb50b8-wip/vexriscv)

main starting

{ "result" : 67814, "ticks" : 737 }

{ "threads": [{ "name": "idle", "stack_size": 256, "stack_used": 192 }]}

{ "threads": [{ "name": "main", "stack_size": 1280, "stack_used": 472 }]}

With this we have an application directly running on the softcore system of the FPGA with UART output. Performance is around 80% of the nRF52840 core, which is decent for something thrown together in a relatively short amount of time.

(Potential) Future Work

One issue with the RIOT fork is that it doesn’t really have the configurability that we get with the softcore. Both the features enabled in the core itself and the peripherals attached to it are fully configurable.

For the core this means that RIOT needs to be aware of which instruction set extensions are enabled. Most of the other settings there are transparent for RIOT, as configuration changes in the pipeline of the core do not change the generated code.

On the peripheral side the main challenge lies with the memory map of the SoC. RIOT would need a way to know about the memory map of the SoC with the attached peripheral types. This would have to include the amount of memory and flash available. One potential solution is to integrate a generator for System View Description files in the FPGA tooling. The LiteX framework already contains a function to generate these files for firmware.

The more interesting part of this is that the core can be extended with custom peripherals, anything between crypto-accelerators and full audio/video processing pipelines. The system is also not limited to a single processing core: multiple cores could be added to experiment with SMP or with running multiple RIOT instances cooperatively. The VexRiscv core is also flexible enough to add custom instructions, with the bit manipulation instruction extensions for RISC-V as possible low-hanging fruit.

Different configurations

During development I played around with different configurations but made no serious benchmarking attempt. I noticed a few things though.

The VexRiscv core uses pipelining to achieve high instruction throughput. A full pipeline in the core is really beneficial to performance and one thing influencing this is the branch prediction algorithm used. I noticed quite a big difference in performance between the different branch predictors available in the VexRiscv core.

The other thing is that the external SDRAM is relatively slow. Performance was abysmal when I combined the SDRAM with a simple, non-caching instruction and data interface on the core. A few KiB of cache on both interfaces resolved this.

Let me know if you have any questions or areas that I should elaborate a bit more on!